Using Slices S3 object storage

Introduction

S3 object storage (with the same API as Amazon S3 storage) lets users store and retrieve any amount of data, at any time, from anywhere on the web, through a simple web services interface. It gives developers access to scalable, reliable and fast data storage infrastructure that is simple to use.

Key concepts of object storage

- Buckets: A bucket is a container in the storage where data is stored. Think of buckets as the top-level folders to organize your data and control access according to your requirements.

- Objects: Objects are the fundamental entities stored in S3, analogous to files in file systems. Each object in a bucket is identified by a unique, user-assigned key.
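Object storage has no real directories: a bucket holds a flat set of keys, and a "path" such as results/run1/out.csv is simply a key that contains slashes. S3 listings can group keys by a prefix and a delimiter (usually /), which is how graphical browsers typically present folders. The pure-Python sketch below mimics that grouping on a hypothetical list of keys; it does not contact the storage, and the function name list_level is illustrative only.

```python
def list_level(keys, prefix="", delimiter="/"):
    """Mimic how an S3 listing with a prefix and delimiter groups flat keys.

    Returns (objects, common_prefixes): the keys located directly under
    `prefix`, and the pseudo-"folders" one level below it.
    """
    objects = []
    common_prefixes = set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue  # key lies outside the requested "folder"
        rest = key[len(prefix):]
        idx = rest.find(delimiter)
        if idx == -1:
            objects.append(key)  # no further delimiter: a plain object
        else:
            # Everything up to and including the next delimiter is one "folder".
            common_prefixes.add(prefix + rest[: idx + len(delimiter)])
    return objects, sorted(common_prefixes)


# Hypothetical keys in a project bucket.
keys = ["testfile.txt", "results/run1/out.csv", "results/run2/out.csv", "results/summary.txt"]
print(list_level(keys))                     # (['testfile.txt'], ['results/'])
print(list_level(keys, prefix="results/"))  # (['results/summary.txt'], ['results/run1/', 'results/run2/'])
```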

Note

In Slices, each project has one bucket, which is shared with all project members.

Interacting with the Slices object storage

The object storage can be accessed via the web console, which provides a graphical interface to manage your buckets and objects. However, for more automated and scriptable interactions, the storage provides several other methods:

- Command Line Interface (CLI): an S3 storage CLI tool lets you perform the same actions as the web console, and more. Its commands can be run directly on the command line or from scripts, which makes it well suited for automation.

- S3 SDKs: AWS provides SDKs for several programming languages that let developers access S3 services programmatically. This is useful for integrating S3 into your applications or for writing more complex scripts. For Python, for example, Boto3 is the Amazon Web Services (AWS) SDK; it allows Python developers to write software that uses S3 storage services. An example is given further below.

First use of the Slices S3 storage

You first need an account and a project on the Slices Portal; see Request A Slices Account.

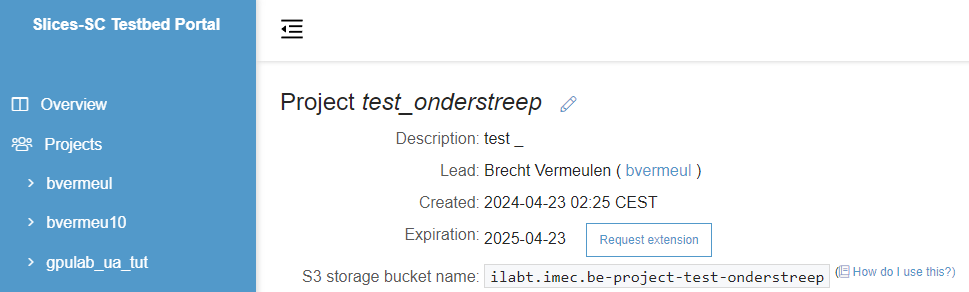

The project page on the portal shows the S3 storage bucket name for that project, as in the screenshot.

(Note: the bucket name is the project name prefixed with ilabt.imec.be-project-, with every + and _ in the project name replaced by -.)
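The naming rule for the bucket can be written as a small function. This is a sketch that implements only the rule as stated here; the project name test_onderstreep is a hypothetical example, the function name is illustrative, and the name shown on the Slices portal remains the authoritative one.

```python
BUCKET_PREFIX = "ilabt.imec.be-project-"


def bucket_name_for_project(project_name: str) -> str:
    """Derive the bucket name: fixed prefix + project name,
    with every '+' and '_' replaced by '-'."""
    return BUCKET_PREFIX + project_name.replace("+", "-").replace("_", "-")


print(bucket_name_for_project("test_onderstreep"))  # ilabt.imec.be-project-test-onderstreep
```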

After that, you can visit the web interface of the Slices storage.

Choose Use Slices-SC account - iam to log in with your Slices account.

The Object Browser view might be empty or might contain existing project buckets.

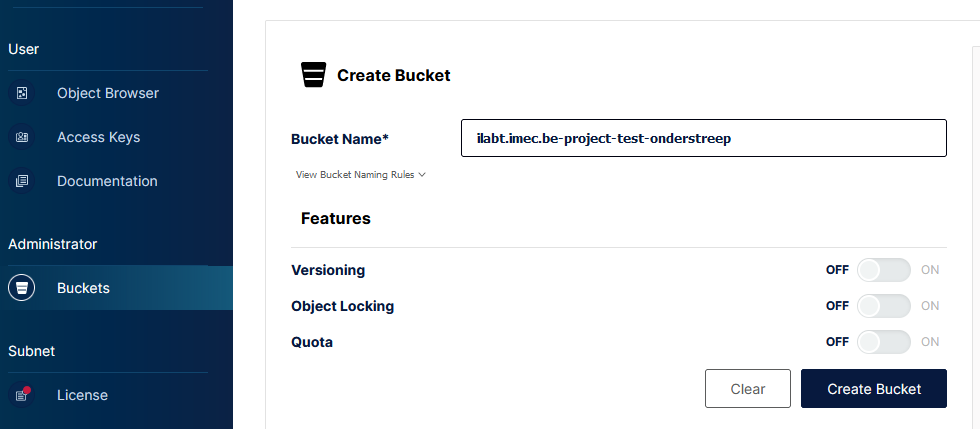

If the bucket of the project you want to use is not listed, click Buckets on the left side, followed by Create Bucket on the right side.

Fill in the exact bucket name that was shown on the Slices portal and click Create Bucket.

If successful, no error is shown and your new bucket appears in the list.

Note

If you try to create a bucket with an incorrect name, the website will fail silently.

Double-check your bucket name if the Create Bucket button seems to have no effect.
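Because a wrong name fails silently, it can help to check the name before typing it into the console. The sketch below checks two things: a subset of the general S3 bucket naming rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit) and an exact match with the name shown on the portal. The function name and the example names are hypothetical, and the Slices storage may enforce further rules that are not covered here.

```python
import re

# Subset of the general S3 bucket naming rules: 3-63 characters, lowercase
# letters, digits, dots and hyphens, starting and ending with a letter or digit.
_BUCKET_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def check_bucket_name(entered: str, expected: str) -> list:
    """Return a list of problems with `entered`; an empty list means it looks fine."""
    problems = []
    if not _BUCKET_RE.match(entered):
        problems.append("does not follow the general S3 bucket naming rules")
    if entered != expected:
        problems.append(f"differs from the name shown on the portal: {expected!r}")
    return problems


expected = "ilabt.imec.be-project-test-onderstreep"
print(check_bucket_name(expected, expected))  # []
# '_' is not allowed and the name does not match the portal: two problems.
print(check_bucket_name("ilabt.imec.be-project-test_onderstreep", expected))
```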

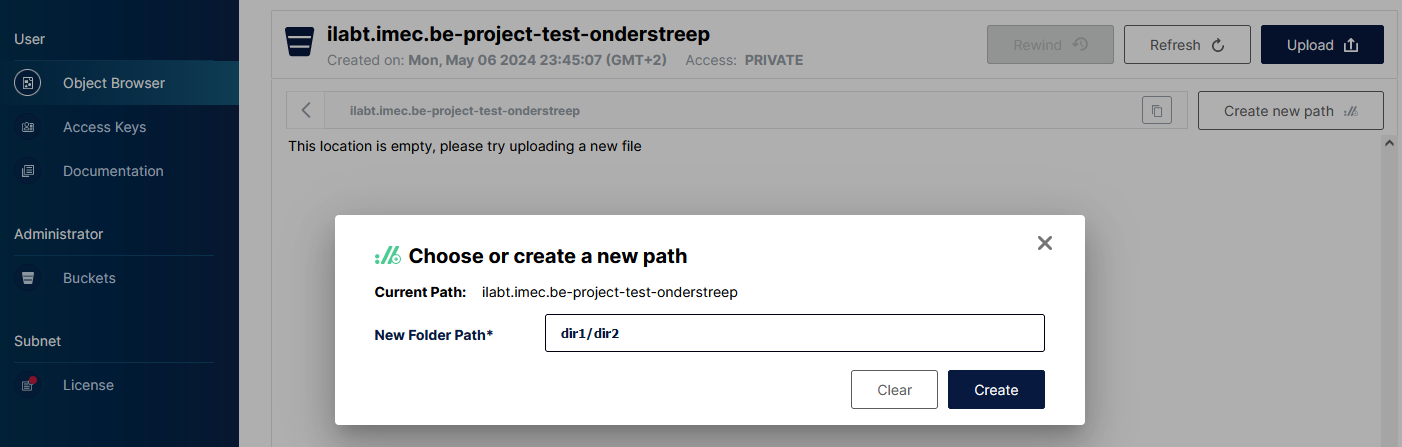

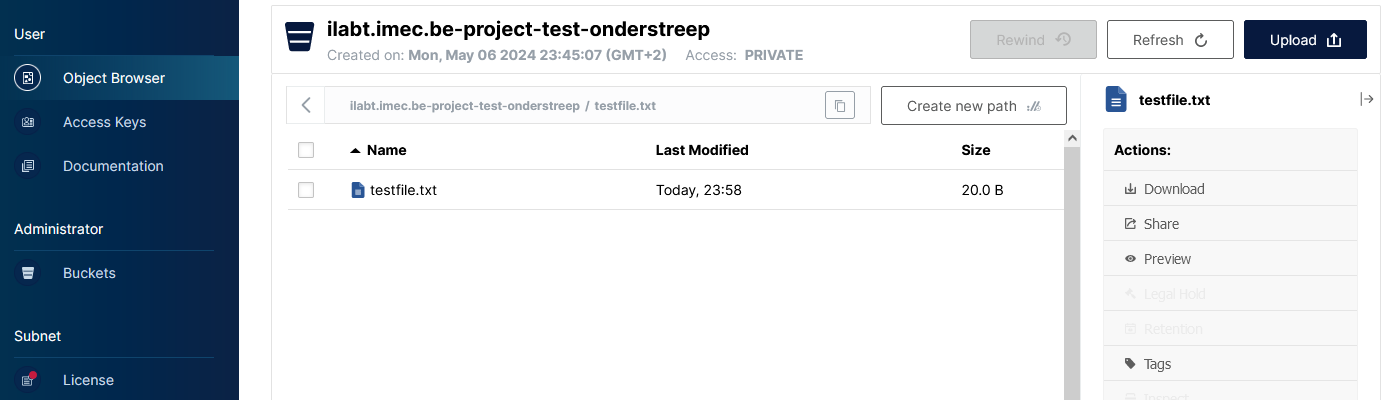

When returning to the Object Browser, you can click the bucket and upload files or create paths.

Accessing the storage with access keys

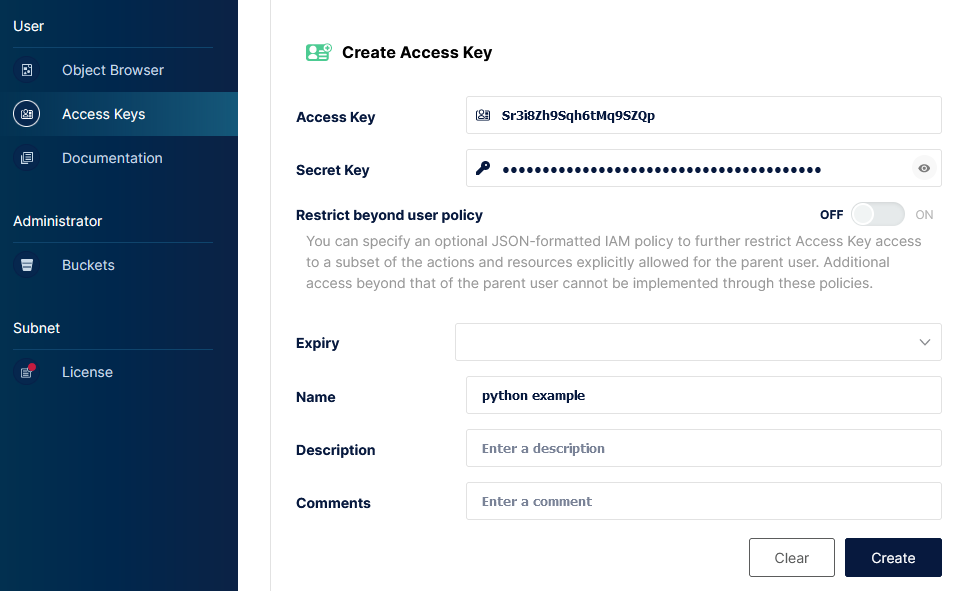

While in the S3 storage web console, you can also create access keys for use with programming languages or CLI tools. Click Access Keys on the left, and Create access key on the right.

Fill in a name for the key and click Create. A pop-up window will show the access key and secret key.

Attention

The secret key cannot be retrieved later, so store it somewhere safe right away!

Python example

To access S3 storage from Python, you can use the boto3 library. It can be installed in various ways, e.g. pip3 install boto3 when using pip, or apt install python3-boto3 when using APT on Debian/Ubuntu.

The example below uploads a file to a bucket and downloads it again. Fill in your own access key, secret key and bucket name.

```python
#!/usr/bin/env python3
import boto3
from botocore.client import Config

# Connect to the Slices S3 endpoint; fill in your own access key and secret key.
s3 = boto3.resource('s3',
                    endpoint_url='https://s3.slices-be.eu',
                    aws_access_key_id='Srxxxxxxxxxxxxxx',
                    aws_secret_access_key='xxxxxxxxxxxx',
                    config=Config(signature_version='s3v4'))

# Fill in the bucket name of your project, as shown on the Slices portal.
bucket = s3.Bucket('ilabt.imec.be-project-test-onderstreep')

# Upload the local file '/users/bvermeul/testfile.txt' to the bucket,
# with 'testfile.txt' as the object name (key).
bucket.upload_file('/users/bvermeul/testfile.txt', 'testfile.txt')
print("Uploaded '/users/bvermeul/testfile.txt' to 'testfile.txt'.")

# Download the object 'testfile.txt' from the bucket
# and save it on the local file system as '/tmp/testing.txt'.
bucket.download_file('testfile.txt', '/tmp/testing.txt')
print("Downloaded 'testfile.txt' as '/tmp/testing.txt'.")
```

When you run this script (saved as upload_download.py):

```console
$ python3 upload_download.py
Uploaded '/users/bvermeul/testfile.txt' to 'testfile.txt'.
Downloaded 'testfile.txt' as '/tmp/testing.txt'.
```
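To confirm that the upload/download round trip preserved the file, you can compare checksums of the original and the downloaded copy. This is a local sketch using SHA-256 from the standard library; the helper names are hypothetical, and the paths are those of the example above.

```python
import hashlib


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_content(path_a: str, path_b: str) -> bool:
    """True if both files have identical content (same SHA-256 digest)."""
    return sha256_of(path_a) == sha256_of(path_b)


# With the paths from the example above:
# print(same_content('/users/bvermeul/testfile.txt', '/tmp/testing.txt'))
```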

If you check the Object Browser, you will see that the file has been added.
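Hard-coding the keys, as in the example above, makes them easy to leak (for instance when sharing the script). A common alternative is to read them from environment variables. The sketch below assumes the standard variable names AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, which boto3 would also pick up on its own when no keys are passed; reading them explicitly gives a clearer error when one is missing. The helper names credentials_from_env and make_resource are hypothetical.

```python
import os
from typing import Mapping, Tuple

SLICES_ENDPOINT = "https://s3.slices-be.eu"


def credentials_from_env(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Return (access_key, secret_key) from the standard AWS variable names."""
    try:
        return env["AWS_ACCESS_KEY_ID"], env["AWS_SECRET_ACCESS_KEY"]
    except KeyError as missing:
        raise RuntimeError(f"environment variable {missing} is not set") from None


def make_resource():
    """Create the same boto3 S3 resource as in the example, without hard-coded keys."""
    import boto3  # imported here so credentials_from_env works without boto3
    from botocore.client import Config

    access_key, secret_key = credentials_from_env()
    return boto3.resource("s3",
                          endpoint_url=SLICES_ENDPOINT,
                          aws_access_key_id=access_key,
                          aws_secret_access_key=secret_key,
                          config=Config(signature_version="s3v4"))
```

After export AWS_ACCESS_KEY_ID=... and export AWS_SECRET_ACCESS_KEY=... in your shell, s3 = make_resource() can replace the boto3.resource(...) call in the example.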

Using the CLI

You can also use a CLI tool, e.g. the MinIO Client. Installers can be downloaded from https://dl.min.io/client/mc/release/. In the commands below, fill in your own access key and secret key.

```console
$ wget https://dl.min.io/client/mc/release/linux-amd64/mcli_20240429095605.0.0_amd64.deb
$ sudo dpkg -i mcli_20240429095605.0.0_amd64.deb
$ mcli alias set slices https://s3.slices-be.eu Srxxxxxxxxxxxxxx xxxxxxxxxxxx
$ mcli du slices
116KiB 2 objects
$ mcli ls slices
```

See the list of commands for all available commands. Do not forget to use the slices alias in the target (e.g. slices/<bucket name>).
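The mcli du command reports the total size and number of objects. A rough boto3 counterpart iterates over bucket.objects.all(), whose items expose a size attribute in bytes. This is a sketch, not a reimplementation of mcli: it lists every object (boto3 handles the paging), which may be slow for large buckets, and the function name summarize_bucket is hypothetical.

```python
def summarize_bucket(bucket):
    """Return (object_count, total_bytes) for a boto3 Bucket resource."""
    count = 0
    total_bytes = 0
    for obj in bucket.objects.all():  # ObjectSummary items; boto3 pages through the listing
        count += 1
        total_bytes += obj.size
    return count, total_bytes


# Usage, with `s3` created as in the Python example above:
# count, total_bytes = summarize_bucket(s3.Bucket('ilabt.imec.be-project-test-onderstreep'))
# print(f"{count} objects, {total_bytes / 1024:.0f} KiB")
```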

Questions and support

E-mail us at helpdesk@ilabt.imec.be